EasyGoing – AR Smart Glasses

Creators

Evan Kenaz Lee

Connor Unsworth

Lauren Freeman

Deliverables

UI, UX, Video

AR Smart Glasses

The Goal

The aim of our product, EasyGoing, is to provide easy-to-use, easy-to-wear technology that gives elderly and disabled people more independence.

EasyGoing helps them get where they want to go by the easiest route, as determined by other users, and keeps them safe along the way.

The Solution

Our audience is an ageing community who still wish to retain their independence. Our smart glasses are designed to help people in their everyday lives, both inside the home and, ultimately, outside it. We aim to open up towns and cities by making them elderly-friendly, and through this to build a community network with our companion app. The companion app helps tackle loneliness by connecting people on similar routes so that they can form walking groups or organise local events.

Because falls are also a major worry, the glasses include a fall detection sensor that acts as a life alert, addressing the common reluctance to wear clunky life alert buttons.

While we initially set out to aid people with disabilities such as hearing and sight impairments, our product is not limited to that audience: it has multiple general-use purposes while retaining its original accessibility features.

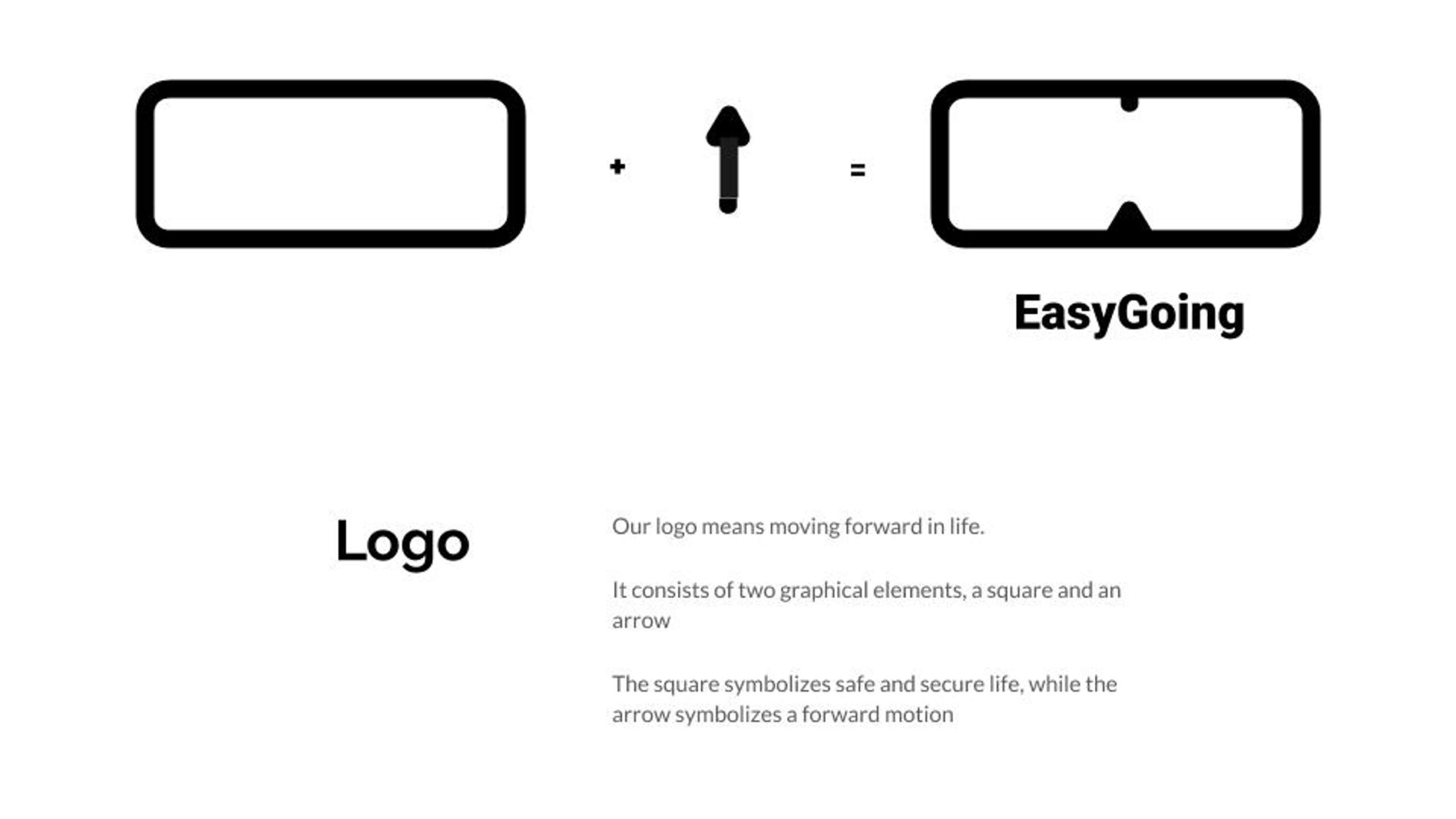

Branding

We keep our design as simple as possible so that our product does not interfere with or hamper users in their everyday lives.

Our theme is minimalist, with a black and white color palette. Our interface uses Futura, an elegant and modern yet minimal typeface.

EasyGoing Glasses Features

Pathfinding

Uses user-generated data to determine the easiest and safest route to the desired destination. Users can set route preferences that take into account aspects such as road quality, elevation and how busy the route is. Based on those preferences, it also offers alternative routes that are faster but may be less accessible.
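As a rough illustration of how preference-weighted routing could work, the sketch below scores each path segment by its length plus penalties for the factors a user wants to avoid, then runs Dijkstra's algorithm over those scores. The segment attributes (`roughness`, `climb_m`, `busyness`), the `Preferences` weights and the cost formula are all hypothetical assumptions, not EasyGoing's actual routing model. Setting every weight to zero gives the plain shortest route, which is one way the faster but less accessible alternative could be offered.

```python
import heapq
from dataclasses import dataclass


@dataclass
class Segment:
    """One walkable path segment (hypothetical attributes, e.g. from user reports)."""
    to: str
    length_m: float   # physical length in metres
    roughness: float  # 0.0 = smooth pavement, 1.0 = very poor surface
    climb_m: float    # elevation gained along the segment, in metres
    busyness: float   # 0.0 = quiet, 1.0 = very crowded


@dataclass
class Preferences:
    """How strongly the user wants to avoid each factor (0 = ignore it)."""
    avoid_roughness: float = 0.0
    avoid_climb: float = 0.0
    avoid_busyness: float = 0.0


def segment_cost(seg: Segment, prefs: Preferences) -> float:
    # Start from distance, scale it up for rough or busy segments,
    # then add a flat penalty per metre climbed.
    penalty = prefs.avoid_roughness * seg.roughness + prefs.avoid_busyness * seg.busyness
    return seg.length_m * (1.0 + penalty) + prefs.avoid_climb * seg.climb_m


def find_route(graph, start, goal, prefs):
    """Dijkstra's algorithm over preference-weighted costs; returns (cost, path)."""
    queue = [(0.0, start, [start])]
    best = {start: 0.0}
    while queue:
        cost, node, path = heapq.heappop(queue)
        if node == goal:
            return cost, path
        if cost > best.get(node, float("inf")):
            continue  # stale entry: a cheaper way to this node was already found
        for seg in graph.get(node, []):
            new_cost = cost + segment_cost(seg, prefs)
            if new_cost < best.get(seg.to, float("inf")):
                best[seg.to] = new_cost
                heapq.heappush(queue, (new_cost, seg.to, path + [seg.to]))
    return float("inf"), []


# Hypothetical example: a short, steep, rough hill path versus a longer, flat high street.
graph = {
    "home": [Segment("hill_path", 300, 0.2, 25, 0.1),
             Segment("high_street", 450, 0.0, 2, 0.9)],
    "hill_path": [Segment("shop", 200, 0.6, 10, 0.1)],
    "high_street": [Segment("shop", 250, 0.0, 0, 0.8)],
}

fastest = find_route(graph, "home", "shop", Preferences())
accessible = find_route(graph, "home", "shop", Preferences(avoid_roughness=1.0, avoid_climb=10.0))
```

With no preferences the shorter hill path wins; once the user asks to avoid rough surfaces and climbs, the flatter high street becomes the cheapest route even though it is longer.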

Sound Indicator

The glasses use inbuilt microphones to detect nearby sounds. Using AI to estimate the distance and source of each sound, they show this information on the display, which can alert deaf or hard-of-hearing users to things such as approaching vehicles or people passing by. The further away a sound is, the smaller and more transparent the icon for its source becomes.
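One simple way to implement the "further away, smaller and fainter" rule is a clamped linear mapping from estimated distance to icon size and opacity. This is a minimal sketch: it assumes the AI model already supplies a distance estimate in metres, and the thresholds (`NEAR_M`, `FAR_M`) and minimum values are hypothetical placeholders that would need tuning through user testing.

```python
from dataclasses import dataclass


@dataclass
class IconStyle:
    scale: float    # 1.0 = full size
    opacity: float  # 1.0 = fully opaque


# Hypothetical tuning values, not taken from the EasyGoing design.
NEAR_M = 2.0       # at or closer than this, the icon is drawn at full size
FAR_M = 30.0       # at or beyond this, the icon is drawn at its minimum
MIN_SCALE = 0.3
MIN_OPACITY = 0.2


def icon_style(distance_m: float) -> IconStyle:
    """Shrink and fade a sound icon linearly as the estimated distance grows."""
    # Normalise distance into 0.0 (near) .. 1.0 (far), clamped at both ends.
    t = (distance_m - NEAR_M) / (FAR_M - NEAR_M)
    t = min(max(t, 0.0), 1.0)
    scale = 1.0 - t * (1.0 - MIN_SCALE)
    opacity = 1.0 - t * (1.0 - MIN_OPACITY)
    return IconStyle(scale, opacity)
```

Clamping keeps very close sounds from being drawn oversized and ensures distant ones never disappear entirely, so a far-off vehicle is still faintly visible.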

Conversation Captioning

Uses speech recognition and AI to generate captions for conversations. The sensitivity and radius of the microphone can be adjusted manually, depending on whether the user is part of a larger group and wants to talk to everyone, or is in a public place and just wants to talk with one person. The glasses can also connect directly to other pairs of glasses, making conversations between users easier.
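The adjustable radius and sensitivity could be modelled as a simple filter over recognised speech. The sketch below assumes a hypothetical upstream speech recogniser that tags each segment with an estimated speaker distance and loudness; `SpeechSegment`, `CaptionSettings` and the default values are illustrative names and numbers, not part of the EasyGoing design.

```python
from dataclasses import dataclass


@dataclass
class SpeechSegment:
    text: str
    distance_m: float  # estimated distance to the speaker
    level_db: float    # estimated loudness of the speech


@dataclass
class CaptionSettings:
    radius_m: float = 3.0       # only caption speakers within this range
    min_level_db: float = 40.0  # sensitivity: ignore speech quieter than this


def captions_to_show(segments, settings):
    """Keep only speech that is both close enough and loud enough."""
    return [s.text for s in segments
            if s.distance_m <= settings.radius_m and s.level_db >= settings.min_level_db]


# Hypothetical example segments.
heard = [
    SpeechSegment("Hello!", 1.0, 55.0),
    SpeechSegment("Over here!", 5.0, 60.0),
    SpeechSegment("(background murmur)", 1.2, 30.0),
]
one_to_one = captions_to_show(heard, CaptionSettings(radius_m=1.5))
group = captions_to_show(heard, CaptionSettings(radius_m=6.0))
```

A small radius suits a one-to-one chat in a busy place, while a wider radius lets a user in a walking group follow everyone; the loudness threshold filters out background murmur in both cases.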